Highlights:

- We review the evidence on Family Connects, a home visitation program for families with a newborn child, as reported in a recent Future of Children policy brief (Princeton/Brookings).

- The policy brief notes that Family Connects has been evaluated in two randomized controlled trials (RCTs), discusses the positive findings of the first RCT, and claims that the second (replication) RCT produced “similar results” regarding program impact.

- We believe both RCTs were well conducted and commend the researchers for seeking to replicate their original findings.

- Our concern: The claim of successful replication is not accurate. The second RCT did not find an overall pattern of superior results for the Family Connects group compared to the control group on the study’s primary outcomes. Indeed, the second study found a statistically significant adverse effect on child emergency medical care utilization (a 19 percent increase in the first two years of life, p=0.01).

- Such misstatement of the evidence (an all-too-common problem in the evaluation literature) could easily lead policy officials to implement Family Connects in the mistaken belief that its effects have been replicated when the opposite is true and there is genuine reason to doubt its effectiveness.

- A response from the authors of the policy brief, and our rejoinder, follow the main report.

- As in all Straight Talk reports, we discuss the accuracy of reported evidence claims and express no opinion about the motives of the study authors.

Family Connects is a home visitation program for families with a newborn child, designed to increase child well-being by bridging the gap between parent needs and community resources. Originating in Durham County, North Carolina (where it was called “Durham Connects”), the program operates at 16 sites across the United States, where it aims to serve every family with a newborn in the community. Family Connects offers between one and three nurse home visits to each family, beginning when the child is about three weeks old. During the visits, nurses use a screening tool to assess family needs and link the family to community resources as needed. This is a “light-touch” (as opposed to intensive) home visiting program that costs just $500 to $700 per family. The Family Connects website provides further details on the program’s components and goals.

Family Connects has been evaluated in two randomized controlled trials (RCTs), both conducted in Durham County. The first RCT, whose analysis sample comprised 531 families, found that the program produced a sizable, statistically significant reduction in child emergency care utilization during the first year of life. The research team, to its credit, then sought to conduct a second (“replication”) RCT to see whether the promising findings of the first RCT could be reproduced in a new sample of families in Durham County. As we have discussed in a previous Straight Talk report, a successful replication RCT is essential for confidence that a program truly produces positive effects. A “false-positive” finding is not uncommon even in a well-conducted RCT, and the only way to convincingly rule it out is to reproduce the original finding in a new study.

Our team’s predecessor organization, the Coalition for Evidence-Based Policy, awarded a grant to the research team in 2014 to conduct the replication RCT, as part of a low-cost RCT initiative funded by the Laura and John Arnold Foundation, Annie E. Casey Foundation, and Overdeck Family Foundation. The study was well conducted, with a large sample (936 families), successful random assignment (as evidenced by highly similar treatment and control groups), successful program implementation, and no sample attrition for the main outcomes of emergency medical care utilization and costs.

Here’s how lead researcher Kenneth Dodge, along with two co-authors, recently described the study findings in a policy brief accompanying the Spring 2019 issue of Future of Children (a collaboration of Princeton University and the Brookings Institution):

“To test whether the Family Connects program could be replicated, the Dodge team conducted it a second time, again in Durham. This replication involved slightly fewer parents and babies but produced similar results with regard to both the implementation and impact measures. The team also conducted a benefit-cost analysis, finding that every dollar invested in Family Connects saved a little more than $3 in spending on other intervention programs. Further analysis showed that in cities of similar size to Durham, with about 3,200 births a year, an annual program investment of $2.2 million would produce community health care savings of around $7 million in the first two years of children’s lives. Thus Family Connects passed the first major test on the route to becoming an evidence-based program with a wide reach. The two Durham trials showed that it could achieve impressive results with a broad array of families, and that the results could be replicated—a step that’s often a major problem with programs shown by a single study to produce impacts.”

What’s wrong with this statement? Put simply, it’s not accurate: The second RCT did not replicate the key results of the first RCT and, in fact, found an adverse effect on the key outcome of child emergency care utilization.

Let’s examine the specifics.

The main goals of the replication RCT are described as follows in the research team’s final study report, which is posted on the Open Science Framework (as we request of all grantees):[1]

“The current study was designed to address two primary research questions:

1. Is random assignment to [the program] associated with lower mother emergency medical care utilization (emergency room visits and overnight hospital stays) and costs between child birth and age 24 months?

2. Is random assignment to [the program] associated with lower child total emergency medical care utilization (emergency room visits and overnight hospital stays) and costs between child birth and age 24 months?[2]” [italics added for clarity]

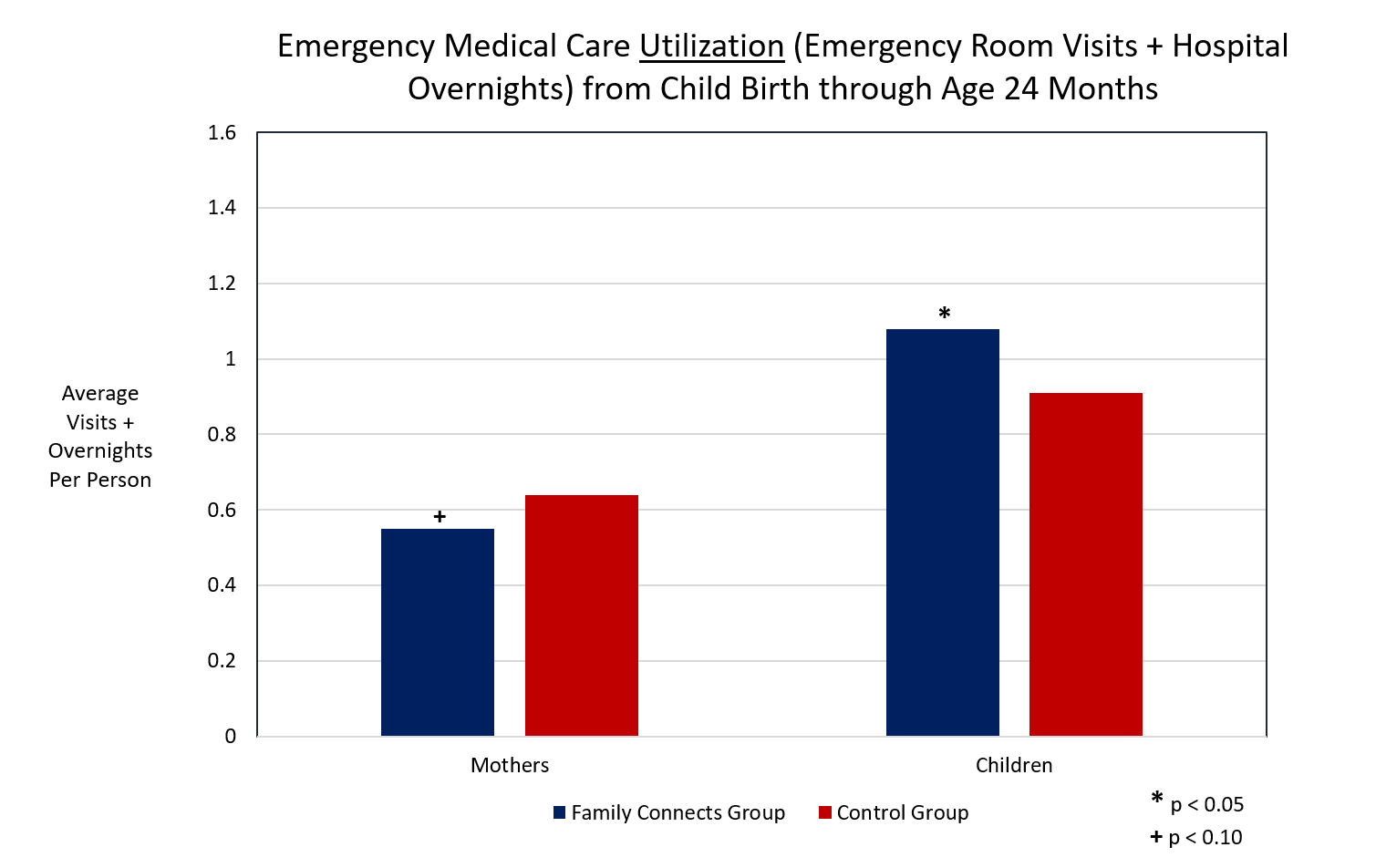

Here are the study’s findings for these two primary questions, per tables 2 and 3 of the final study report:

As you can see, the study did not find an overall pattern of superior results for the Family Connects group compared to the control group on the study’s primary outcome measures. Indeed, the only statistically significant finding was an adverse effect on child emergency medical care utilization: specifically, a 19 percent increase in such utilization during the child’s first two years of life compared to the control group (p=0.01).[3]

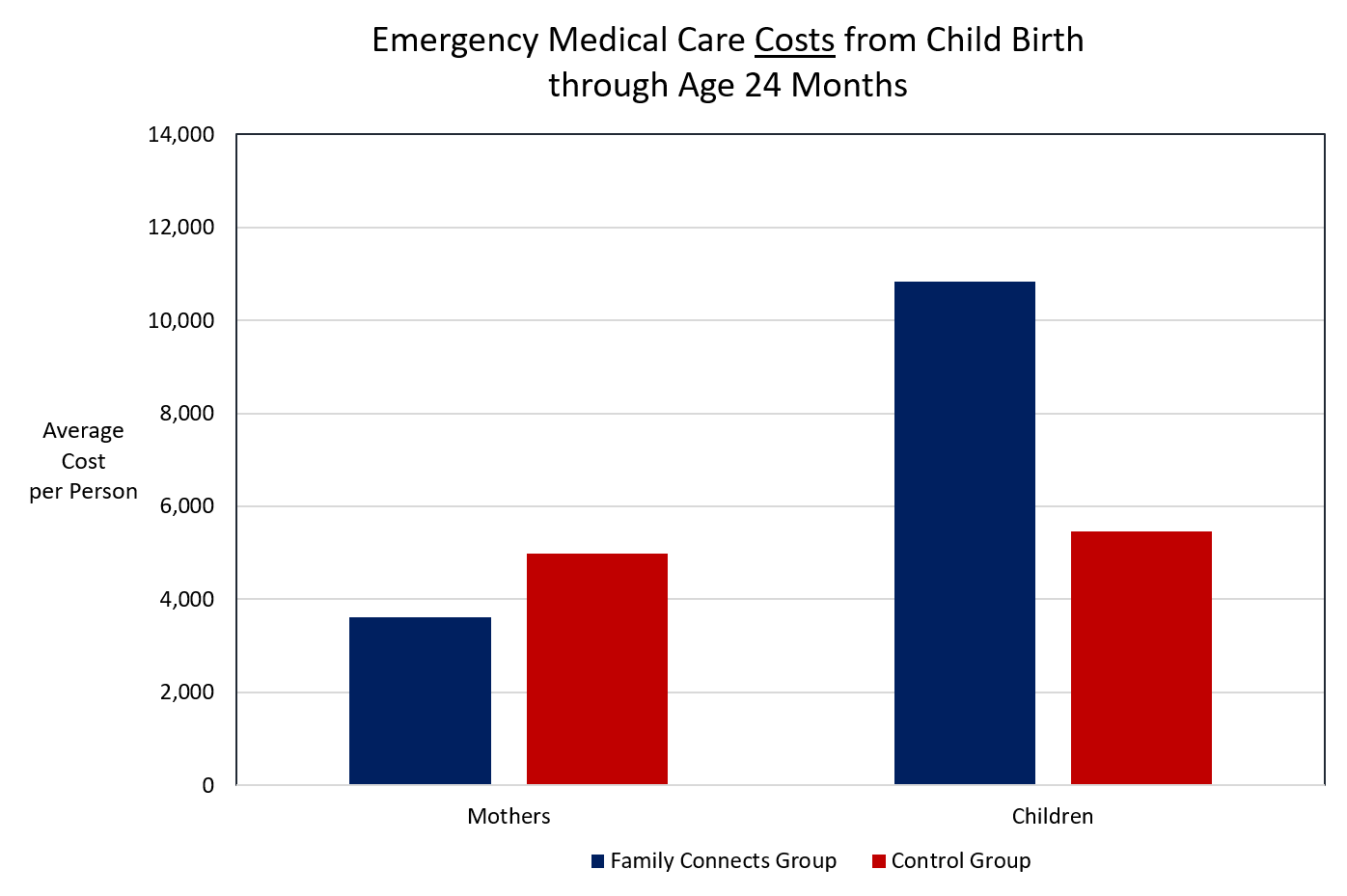

Thus, the claim in the Future of Children policy brief (as excerpted above) that the replication RCT produced “similar results” to those of the first RCT regarding program impact is not accurate. Also, contrary to the claim in the policy brief, the second RCT used a substantially larger, not smaller, sample of families to estimate the program’s effects than the first RCT (936 families versus 531), and at least in that respect was a stronger study. Finally, the policy brief’s claims of positive benefit-cost results and healthcare savings from Family Connects are based solely on the first RCT[4], not (as implied in the brief) on both studies. Indeed, as the second chart illustrates, a similar analysis of the second study would show program costs well exceeding the benefits.
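For readers who want to see where the brief's headline numbers come from, the short Python sketch below simply redoes the arithmetic on the figures quoted in the brief: a $2.2 million annual investment, about 3,200 births a year, and around $7 million in health care savings over the children's first two years. It assumes (our assumption, not stated in the brief) that the $7 million is measured against one year's investment, i.e., one birth cohort followed for two years, and that each birth corresponds to one family served. Under those assumptions the figures agree with each other and with the $500 to $700 per-family cost cited earlier; this is a consistency check only, not evidence that the savings were replicated.

```python
# Figures quoted in the Future of Children policy brief (derived from the first RCT only).
ANNUAL_INVESTMENT = 2_200_000   # dollars per year, for a city of Durham's size
ANNUAL_BIRTHS = 3_200           # approximate births per year in such a city
TWO_YEAR_SAVINGS = 7_000_000    # "around $7 million" in community health care savings


def benefit_cost_ratio(savings: float, cost: float) -> float:
    """Dollars saved per dollar invested."""
    return savings / cost


def cost_per_family(cost: float, births: int) -> float:
    """Assumption: one family per birth, since the program aims to serve every newborn."""
    return cost / births


ratio = benefit_cost_ratio(TWO_YEAR_SAVINGS, ANNUAL_INVESTMENT)
per_family = cost_per_family(ANNUAL_INVESTMENT, ANNUAL_BIRTHS)

print(f"Savings per dollar invested: ${ratio:.2f}")    # Savings per dollar invested: $3.18
print(f"Implied cost per family: ${per_family:,.2f}")  # Implied cost per family: $687.50
```

The ratio of about 3.2 matches the brief's "a little more than $3," but because every input comes from the first RCT, the calculation says nothing about the replication's results.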

In short, this is a case where evaluation findings that cast real doubt on the effectiveness of Family Connects are misstated as positive in a prestigious policy publication. Unfortunately, this is not an isolated example in the research literature; it is rather—as we have sought to illustrate in many Straight Talk reports—an endemic problem in the field of policy evaluation.

Such overstatement of evidence findings is not innocuous. In this case, it could easily lead state or local policy officials to adopt and implement Family Connects in the mistaken belief that its effects have been successfully replicated when the opposite is true and there is genuine reason to doubt whether the program produces beneficial effects for children and families. It also makes it far more difficult for policy officials to distinguish the relatively few early childhood programs that do have credible evidence of effectiveness from the many others with inflated or illusory evidence claims, so that such officials can focus public funds on approaches that will meaningfully improve children’s lives.

Consistent with Nobel laureate Richard Feynman’s entreaty to graduating Caltech students in 1974, we believe scientists have a special responsibility to present study findings in an impartial manner. We commend the research team for conducting a replication RCT when they could have rested on the laurels of the first study. But we would now encourage them to forthrightly acknowledge that the second study was unable to reproduce the key results of the first, and that Family Connects has not met an essential condition (replication) needed to have confidence that the program would produce beneficial effects if implemented more widely.

Response provided by Ken Dodge, Ron Haskins, and Deb Daro, authors of the Future of Children policy brief

We thank Jon Baron for the opportunity to respond to his concerns regarding the accuracy of our policy brief. While we agree that some comments relating to the Family Connects (FC) findings might have been stated more clearly, our essential message in the brief remains accurate: the adoption of universal approaches offers an empirically-promising avenue to improve family and child outcomes.

The body of evidence produced in three FC trials (two RCTs and a quasi-experiment) offers a more complete picture of the model’s potential than the one narrow analysis Jon considered. The specific concern raised by Jon is based on one sub-analysis in the second RCT that used de-identified administrative data and a t-test of the difference between intervention-assigned infants and control-assigned infants on post-intervention emergency health-care episodes. Because of de-identification, the analysis could not include baseline covariates and included families that had moved out-of-county before post-intervention follow-up. As reported by Goodman and Dodge and posted by Family Connects and the Arnold Foundation, this analysis found more hospital overnights among intervention-assigned infants, but only for chronic illnesses (not accidents or injuries) and only between 12 and 24 months of age (not from birth to 12 months).

Subsequent analyses using identified data revealed that pre-treatment medical risk, defined by low birthweight, premature gestational age, birth and other complications, and in-utero substance exposure, had strong effects on post-treatment outcomes, and consenting intervention-assigned infants had 25% more of these risks than controls (p<.01).

Controlling for these baseline differences produced a different set of findings, as reported in the Dodge and Goodman chapter in the volume. These analyses indicate that in the second RCT, similar to the first RCT, FC nurses reached 76% of families, with high (90%) protocol adherence and strong (kappa = .75) agreement in scoring risk. Intervention-assigned families had some better outcomes: more community connections, lower rates of maternal anxiety/depression, and fewer emergency-room (ER) visits and lower ER costs among infants with one or more medical risks. They also had more hospital overnights for illnesses (but not injuries/accidents).

A third trial, a quasi-experiment conducted in four new communities, further confirms these findings. Nurses reached 84% of families, with high (87%) protocol-adherence and strong (kappa = .78) agreement in risk-scoring. Impact analyses showed that intervention-assigned families had more community connections, higher father-infant relationship quality, and fewer emergency care episodes. The chapter also reports new analyses from the first RCT showing that at age 60 months, intervention-assigned families had 39% fewer Child Protective Services investigations for maltreatment.

We have learned that variation in specific findings exists for FC, as it does for all evidence-based programs. Our learning is not yet complete. As noted in the brief, we encourage communities implementing FC and other universal strategies to continue to conduct research on both implementation quality and outcomes. We hope that criticism of research based on incomplete information does not have the inadvertent effect of dissuading evidence-based program leaders from opening their programs to ongoing evaluation. Unlike a court of law that decides yes-or-no once-and-for-all, science proceeds through multiple studies that accumulate evidence to improve practice.

[The authors asked us to post a link to the following table from the Dodge and Goodman chapter mentioned above: Implementation and Impact Findings across Three Trials of Family Connects.]

Rejoinder by Arnold Ventures’ Evidence-Based Policy team

We thank the authors of the Future of Children policy brief for their response to our concern that the replication RCT of Family Connects found an adverse effect on the key targeted outcome of child emergency medical care utilization, and that their brief misstates the study’s findings as positive.

In their response, the authors state that a more positive set of findings on this key outcome, based on a different analysis, is reported in the Dodge and Goodman chapter of the Future of Children volume (pp. 41-59). However, that is not true. The Dodge/Goodman chapter reports findings on child emergency care utilization that are identical to those discussed in our Straight Talk report, but phrased such that a reader might miss the key point that the effects are adverse. Here is the chapter’s discussion of the second RCT’s findings on infant health and wellbeing, reproduced in its entirety:

“Infant health and wellbeing. Unlike the first trial, in which intervention infants fared better, in the second trial intervention and control infants had similar rates of serious, emergency medical care episodes between birth and six months. In the first trial, the intervention group had a mean of 1.5 episodes per family by 24 months of age, and the control group had 2.4. In the second trial, the intervention group’s mean was 1.1, which was lower than the mean in the first trial. Yet the control group mean was lower still, at 0.9 episodes. We have no explanation for the precipitous drop in these episodes among the control group. Involvement with child protective services hasn’t yet been evaluated for the second RCT.” [italics added]

Per the chapter’s own figures for the second trial, over the study’s 24-month follow-up period the Family Connects group had 1.1 emergency care episodes versus 0.9 episodes for the control group. This is the same adverse effect discussed in the Straight Talk report (see the first chart in our main report). Although not mentioned in the above passage, this adverse effect was highly statistically significant (p=0.01). The linked table at the end of the authors’ response also shows this same adverse effect.[5]

In short, there is no ambiguity: The second RCT failed to produce the hoped-for effects on the study’s stated primary outcomes, and found a significant adverse effect on one of these outcomes (a 19 percent increase in child emergency care episodes).

To set the record straight on two other items in the authors’ response: (i) in the second RCT, the Family Connects and control groups were highly similar at study entry in their key characteristics, including infant medical risk (see baseline table);[6] and (ii) the third “trial” described in their response and in the Dodge/Goodman chapter was not an RCT, but rather a pre-post study that examined child and family outcomes in four North Carolina counties before and after adoption of Family Connects, a design that is considered very weak (link, p. 2).[7]

We appreciate the authors’ engagement in this discussion, and hope it is useful to readers.

References:

[1] The same goals were also set out at the study’s inception, in the research team’s pre-registered analysis plan.

[2] We corrected a typographical error in the second research question, which in the final study report read: “Is random assignment to [the program] associated with lower child total emergency medical care costs (emergency room costs and overnight hospital costs) between child birth and age 24 months?” We replaced “costs” with “utilization,” replaced “emergency room costs and overnight hospital costs” with “emergency room visits and overnight hospital stays,” and added “and costs” after the parenthetical. This correction is consistent with the phrasing used in the study’s pre-registered analysis plan as well as the phrasing of the first research question.

[3] As shown in the first chart, the study found a trend toward lower emergency medical care utilization by mothers in the Family Connects group versus the control group. This effect approached, but did not reach, statistical significance (p=0.09), and is therefore less reliable (i.e., more likely to be due to chance) than the adverse effect on child emergency care utilization (p=0.01).

[4] These same benefit-cost results are reported in the research team’s 2014 publication of the results of the first RCT.

[5] In the table, second row from bottom, “I>C” for RCT II indicates significantly more child emergency care episodes for the intervention group than the control group.

[6] The baseline imbalance in infant medical risk referenced in the authors’ response applies only to the subsample of families (39 percent of the full RCT sample) who, after random assignment, consented to an interview measuring outcomes such as maternal anxiety. This subsample was not used to measure the study’s primary outcomes of emergency medical care utilization and costs, so the baseline imbalance in this subsample is not relevant to the study’s primary findings.

[7] Because this type of study measures outcomes for children and families before and after the counties’ adoption of Family Connects, but does so without reference to a group of control or comparison counties, the study cannot answer whether any changes observed in these outcomes over time would have occurred anyway, even without adoption of Family Connects.